Project Summary

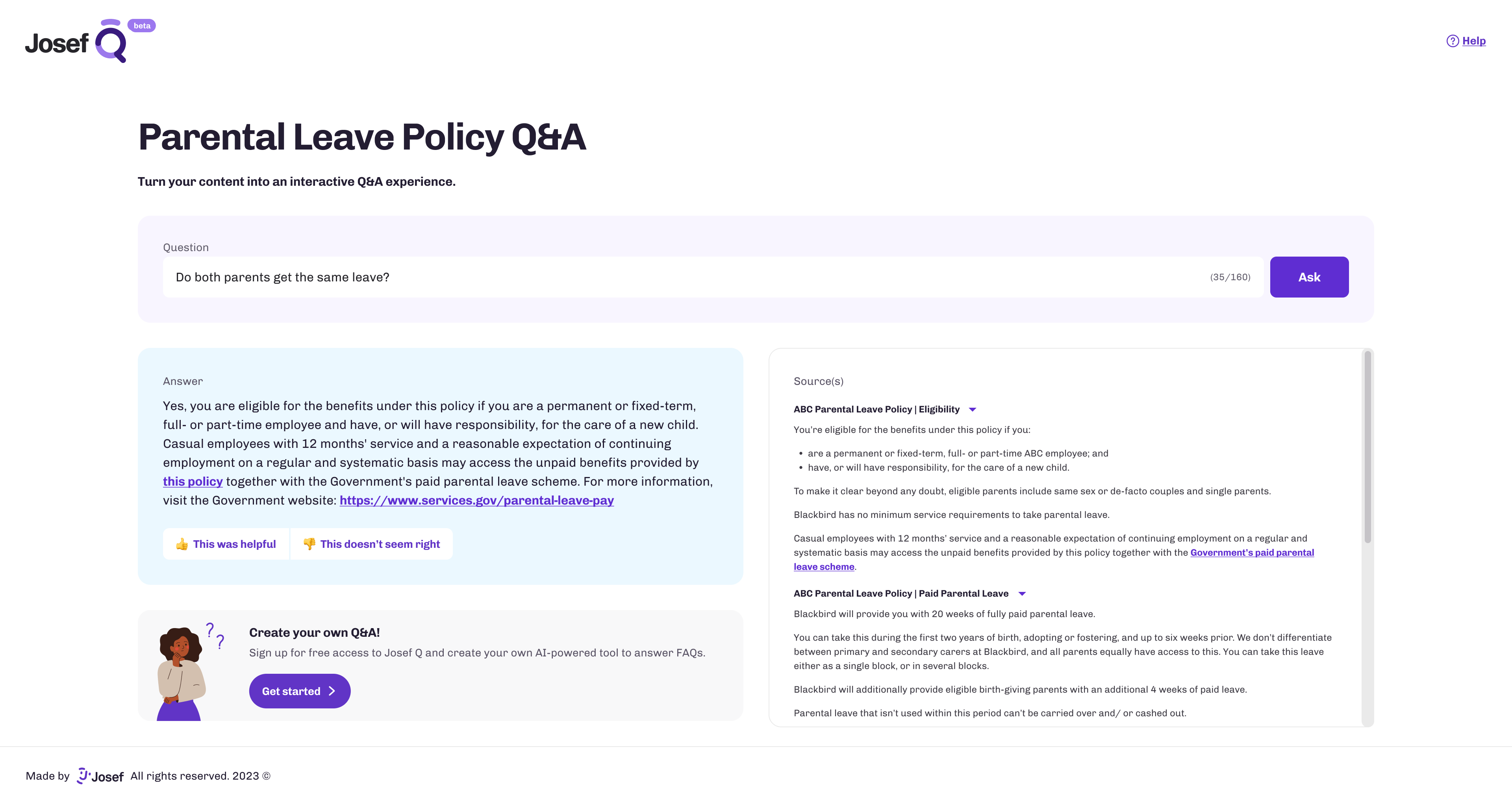

During my one-year tenure at Josef, I led the design of a new GenAI tool that lets customers create and fine-tune support and FAQ tools from their own documentation. End users can then query lengthy, complex policies, standards, regulations, handbooks and similar documents quickly and easily, saving the time and resources that a manual search would otherwise consume.

Discovery

Validation

As former lawyers with a wide network in the industry, the CEO and COO had already validated the need for this product. My responsibility, however, was to research the jobs to be done (JTBD) that this product could address and the best ways to deliver the solution. The main pressure was time to market: we knew that many companies were attempting the same thing, including some of the world’s biggest tech companies such as Google and Microsoft, so it was important for Josef to stay ahead of the curve.

Understanding AI technology

My first task was to understand the technology: its capabilities and weaknesses in relation to the problem we were trying to solve. The project began shortly after the launch of OpenAI’s ChatGPT, so as a team we already had a rough idea of what could be achieved, but we needed to know more. I took a course in generative AI and large language models (LLMs), and worked closely with the CTO as we continually experimented and built prototypes with this rapidly evolving technology.

Customer interviews

Once we had our first usable prototype, we spoke to potential customers to find out whether the product effectively met their needs. These conversations revealed how critical response accuracy from the LLM would be. GenAI models are known to ‘hallucinate’, producing plausible but incorrect answers, which poses a significant risk to legal professionals: a single inaccurate answer could leave them dangerously exposed.

Jobs To Be Done

Our interviews also helped us identify a few key use cases and personas for this product. These were primarily lawyers who needed quick answers from large legal documents, and in-house corporate legal and HR teams who wanted to understand company policies and help their employees understand them too.

Ideation

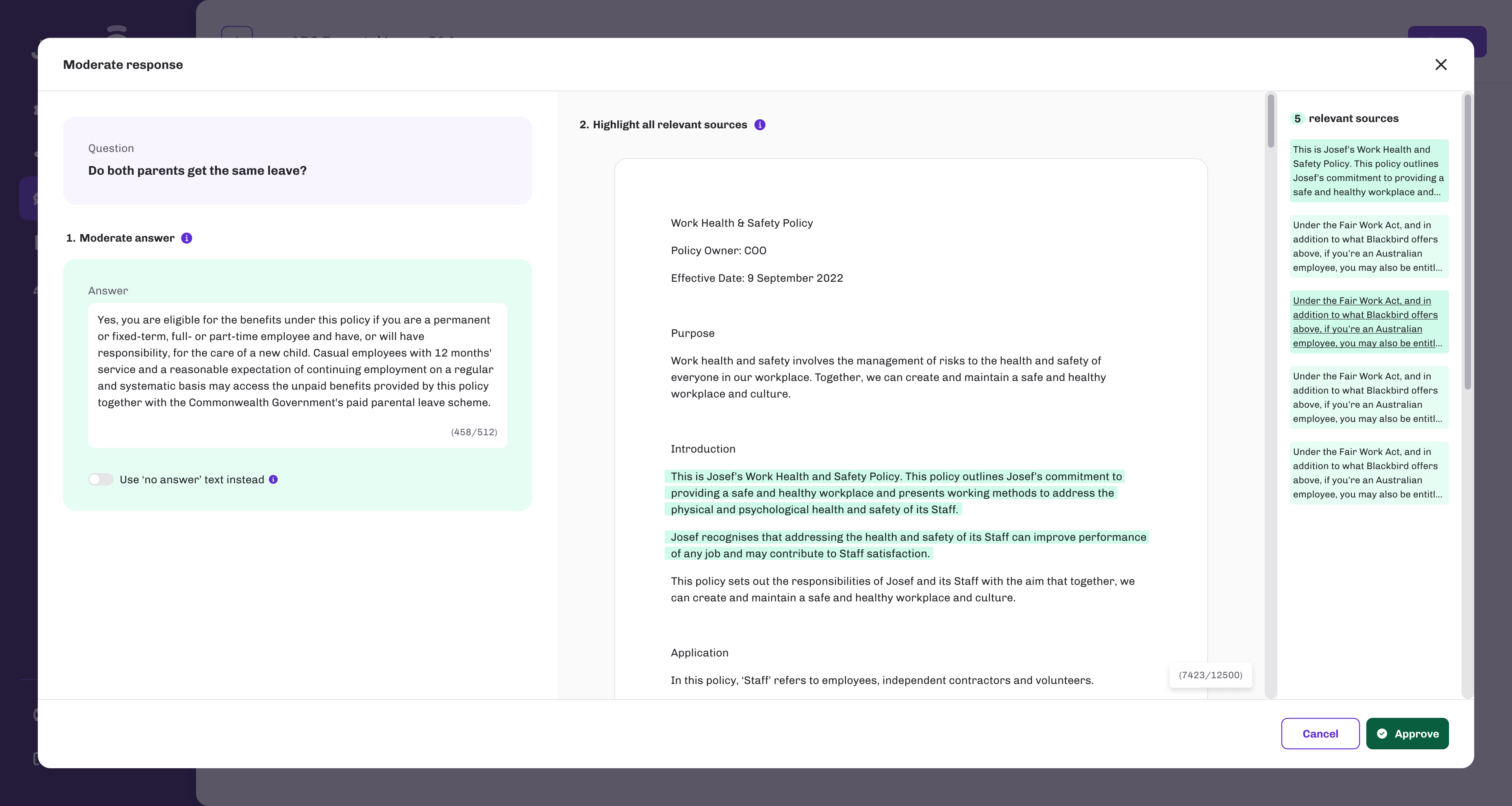

Human-in-the-loop

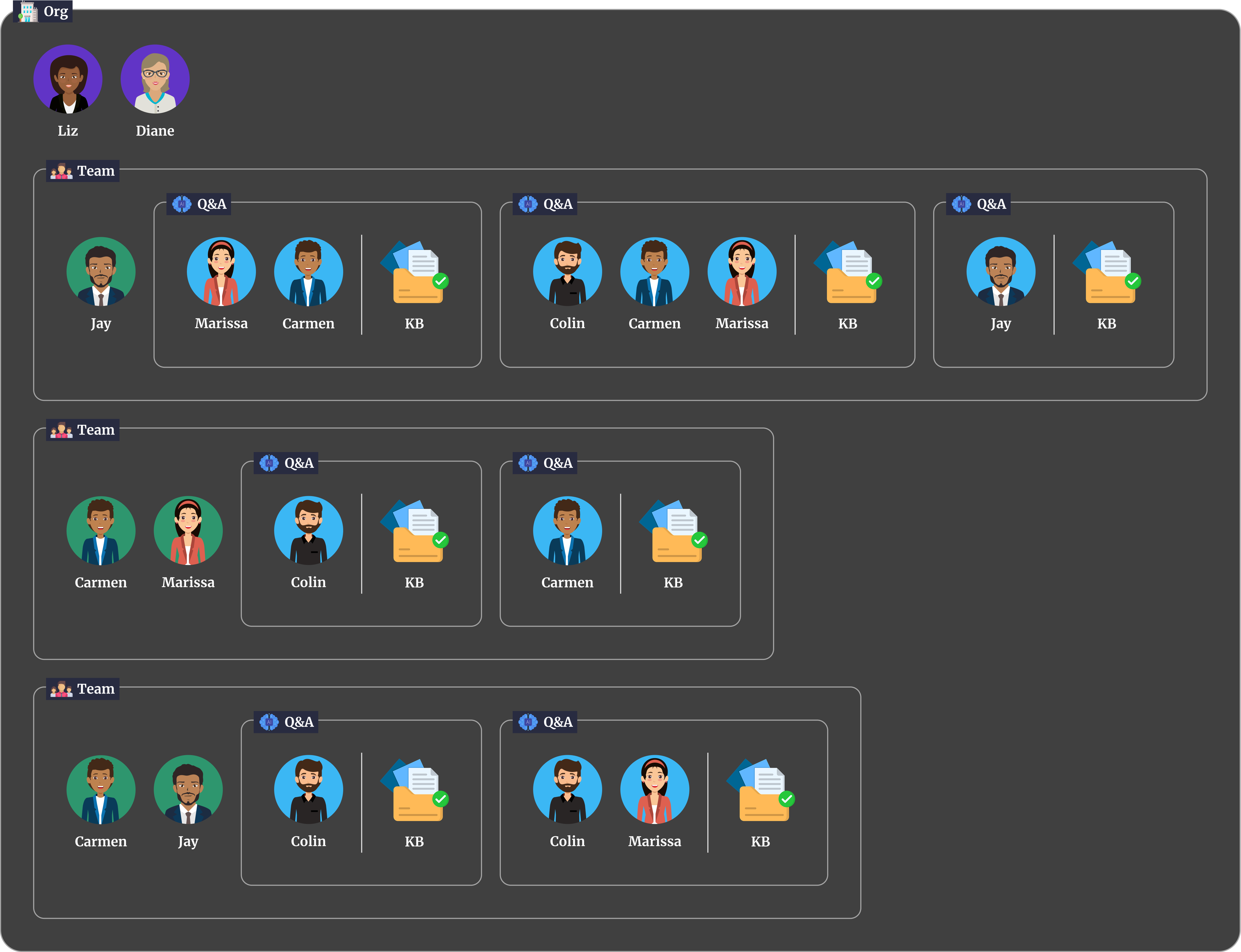

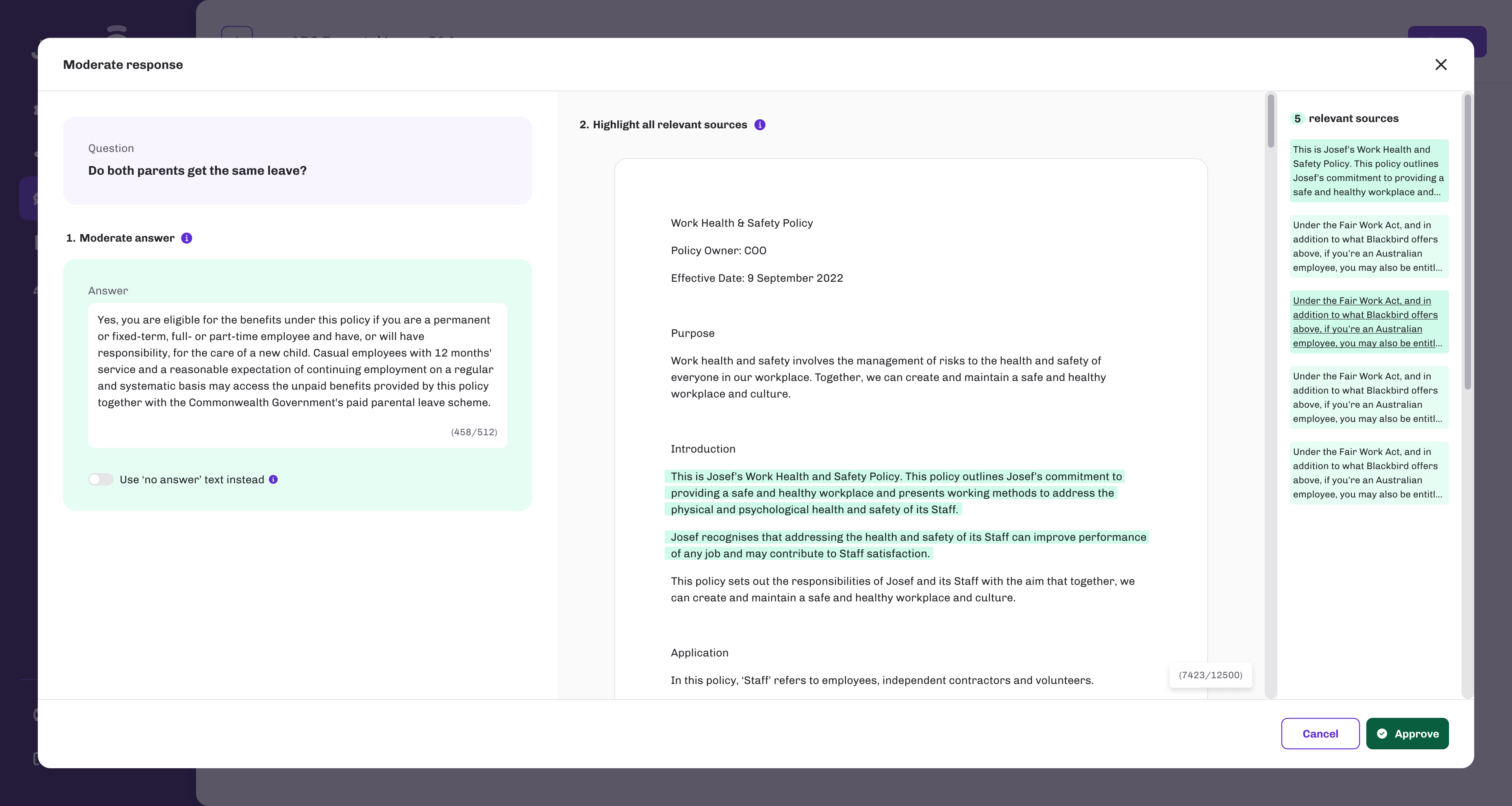

One of the key challenges identified in discovery was making the tool’s answers as close to 100% accurate as possible. Our solution was to enable ‘human-in-the-loop’ training, which allows admins to moderate, correct and improve the AI’s responses. Our interviews also showed that the ‘admins’ of these tools were not always the subject matter experts for the content itself, so it was important to add separate ‘editorial’ privileges for some users.

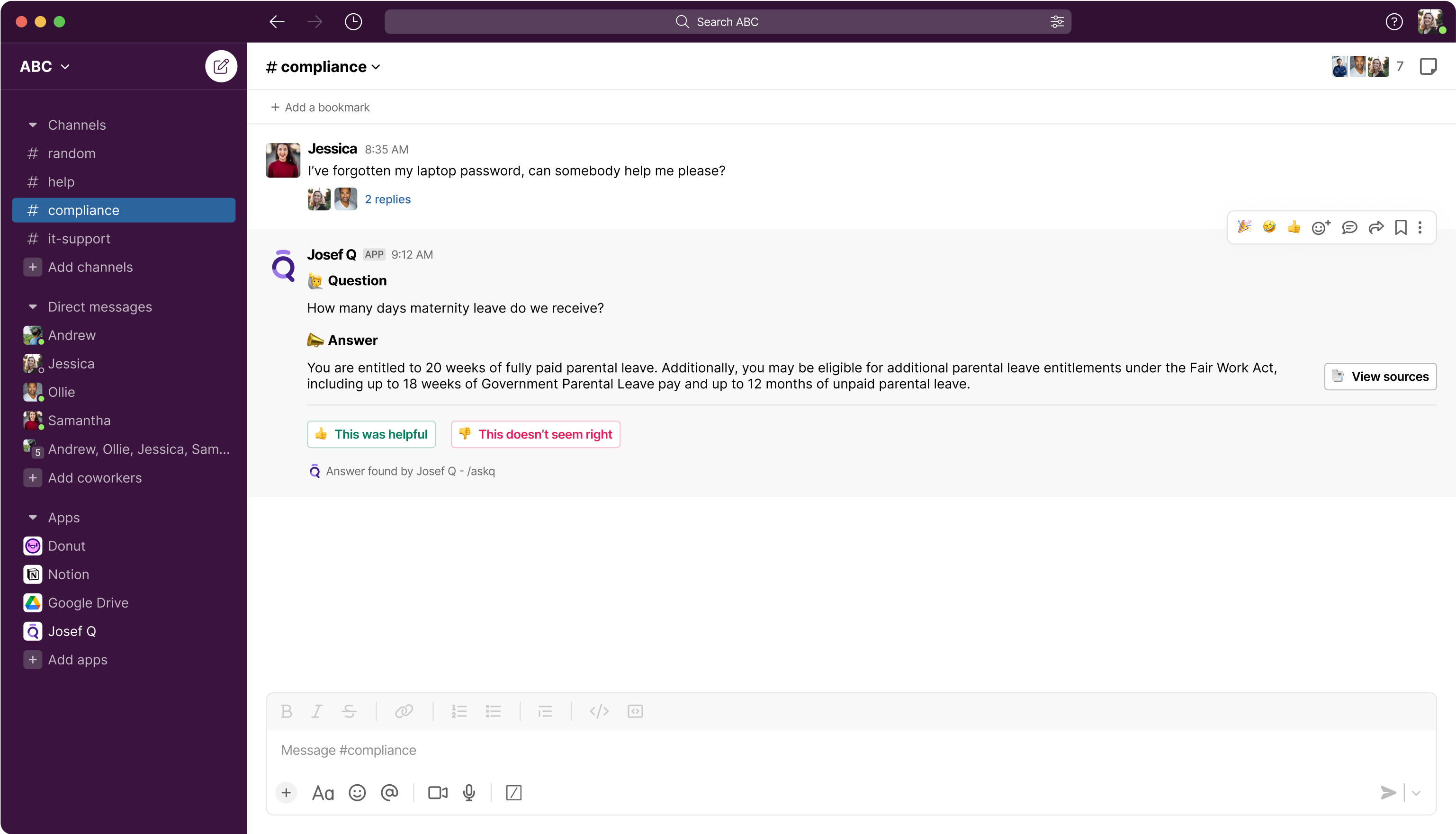

Feedback loop

To understand how accurate and useful the LLM’s answers were, we asked users for feedback on the responses they received. This data would then show admins and editors which questions and answers needed more human-in-the-loop training.

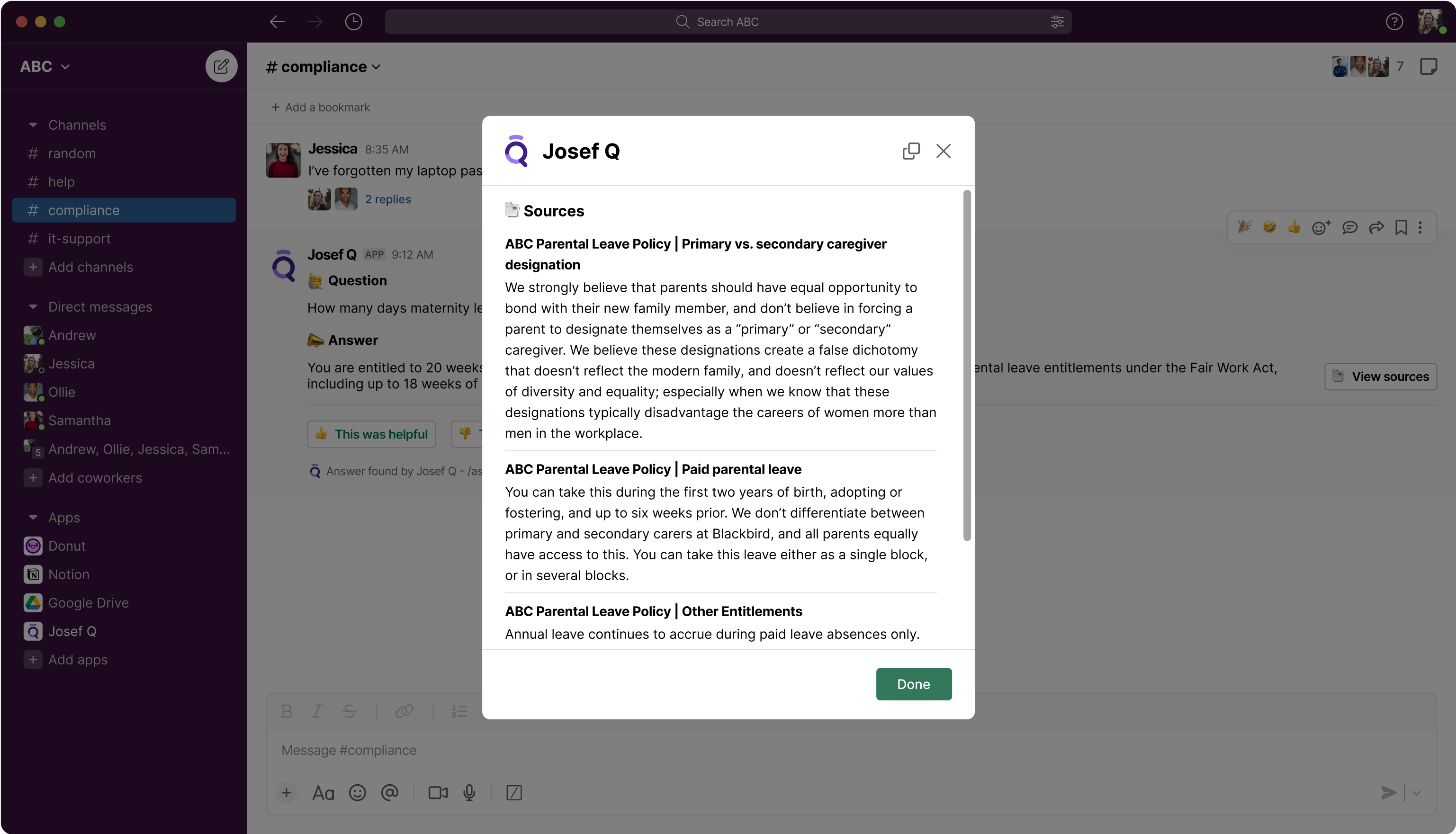

Peace of mind

Product adoption depends on users being able to trust their tools. To provide peace of mind, we included ‘sources’ in AI responses, allowing users and admins to verify where in the original knowledge base the AI found the information it used to generate each response.

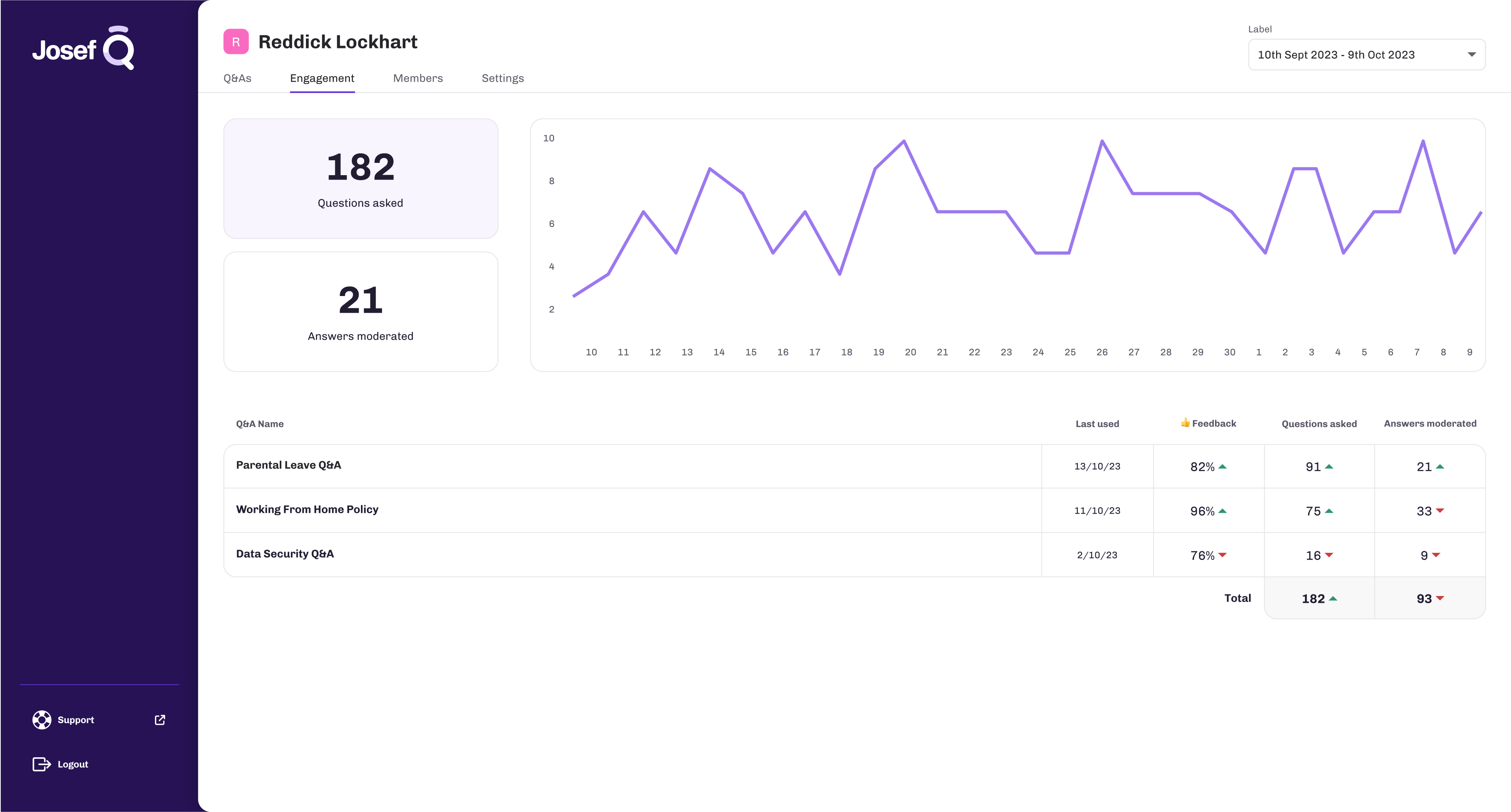

Reporting

We also identified the need for basic analytics, which would let tool creators spot trends in the most commonly asked questions and see where their content needed more clarity.

Meet the user where they are

A standalone product is useful, but to encourage adoption we decided to prioritise third-party integrations that let end users access these Q&A tools inside software they already use, such as Slack and Microsoft Teams.

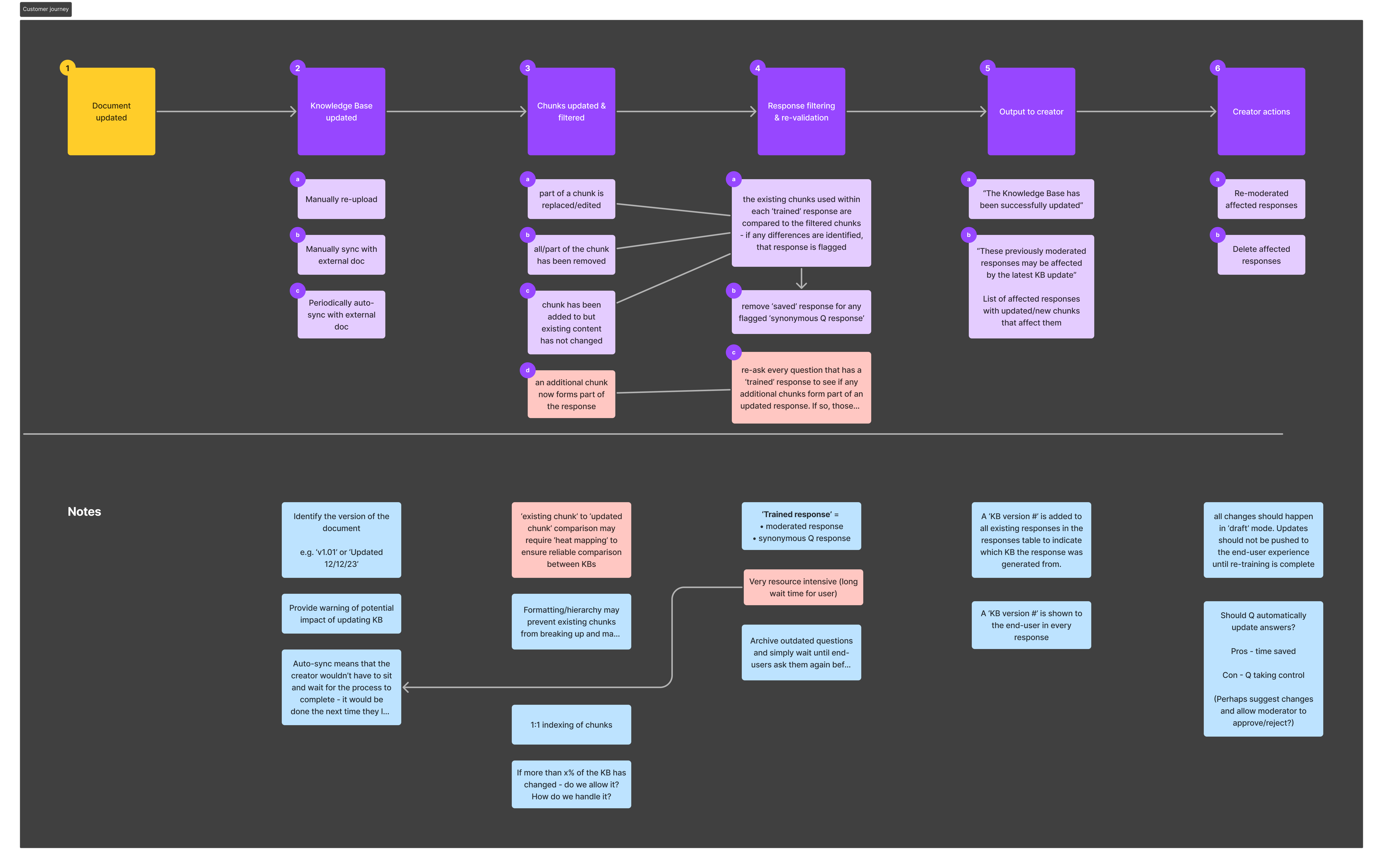

Dynamic knowledge base

So that owners would not have to start again every time their knowledge base needed updating, we introduced ‘dynamic knowledge bases’. Owners can upload an updated document, and only the sections that differ from the original are changed in the knowledge base, preserving existing training and responses.

Delivery

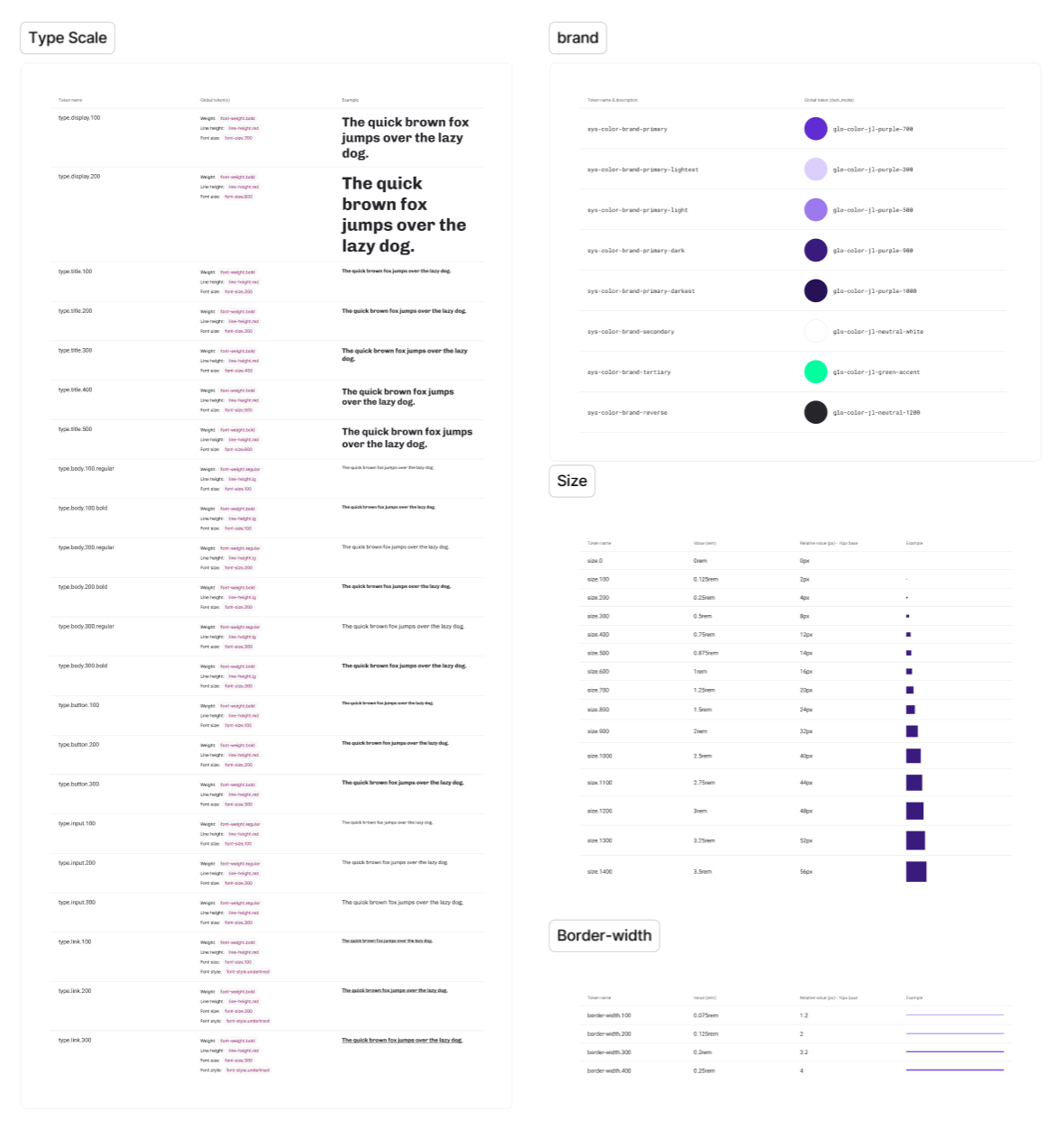

Working closely with the CTO and CEO, we prioritised and sequenced the design and build of the first MLP (minimum lovable product) release for a small, controlled pilot group of potential customers who could give us early feedback. I created the designs in Figma while simultaneously building and referencing a new component library compatible with the Bootstrap framework. This allowed us to deliver at speed.

Result

We continued to gather feedback over the following six months, adding features and improving the UI and usability. This eventually led to the excitement of signing our first major corporate client, MasterCard. By the end of the first year, we had reached our milestone of $100k in forecasted ARR.